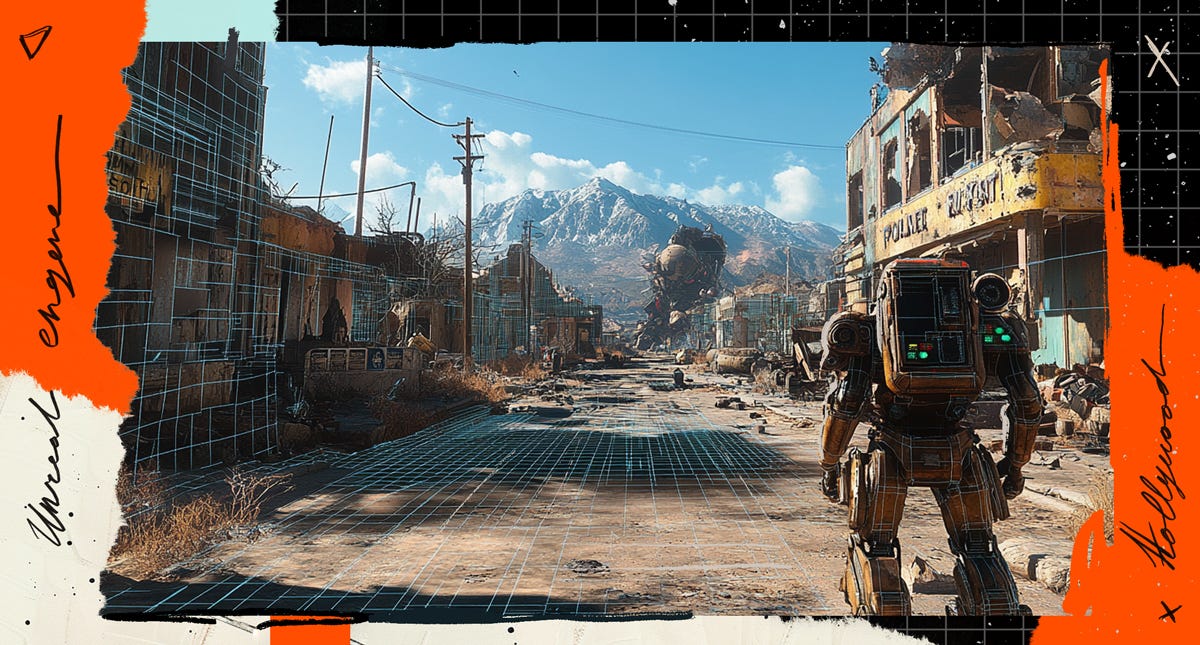

How Video Game Tech is Powering a Revolution in Hollywood

From The Mandalorian to Fallout, games tech is changing Tinseltown. Oscar-winning visual effects supervisor Ben Grossmann and virtual production developer Johnson Thomasson tell us how.

Heads up! A16Z GAMES SPEEDRUN 004 applications are now open. If you didn’t already know, SPEEDRUN is our accelerator for startups at the intersection of Tech x Games. We invest $750k in each selected company.

Learn more and apply here.

Here at A16Z GAMES, we’re fascinated by the ways video game tech and tools are changing the world. Whether it’s NASA using Unreal Engine 5 to prep for its next moonshot, or the surprising ways AI is merging film and games, we think game technology is set to make the future more interactive than ever before.

We’re not the only ones with this vision. This week we spoke with Oscar-winning visual effects supervisor Ben Grossmann, who has used game engines and virtual reality tech to level up visual effects production on shows like Fallout and films like The Jungle Book and The Lion King.

We also spoke with Johnson Thomasson, who has been at the forefront of deploying tech such as Unreal Engine-powered LED volume environments on shows like The Mandalorian and The Book of Boba Fett.

That story is below. First, this week’s most futuristic news links:

News From the Future

📈 Steam Breaks Record With 37 Million Concurrent Players (PC Gamer)

2024 has been a boom year for PC gaming, with monster hits like Helldivers 2 and Palworld each selling over 10 million copies on Steam alone.¹ The Steam platform as a whole reached new heights this week, thanks in part to another breakout hit, the Chinese-developed action game Black Myth: Wukong.

🇩🇪 335,000 Attendees Visited Gamescom 2024 (GamesIndustry.biz)

The event in Cologne also drew more than 32,000 trade visitors and 1,463 exhibitors, with overall attendance up 4.6% compared to last year. Online viewership of the show’s associated events was way up as well: 40 million viewers tuned in to Opening Night Live, exceeding expectations and doubling last year’s reach.

⚔️ Supercell’s Squad Busters Returns to Growth (MobileGamer.biz)

Analysts have been closely watching the performance of Supercell’s latest release as a bellwether for the health of the overall mobile gaming market. Daily revenue had been in steady decline and hit a low in August, according to AppMagic estimates. But Squad Busters is now back above $400k in daily revenue during weekend peaks.

🚰 Valve Officially Announces Its Next Big Game, Deadlock (The Verge)

The game has been an “open secret” for a few months now, with nearly 90,000 peak concurrent players playtesting the game over the weekend. Valve has stayed relatively quiet about the game—its Steam page explains simply that “Deadlock is in early development with lots of temporary art and experimental gameplay.”

How Video Game Tech is Powering a Revolution in Hollywood

For most of the 20+ years Ben Grossmann has worked in film and TV, he’s been trying to find ways to “speed up the creativity cycle.”

The visual effects supervisor and CEO of VFX production company Magnopus has worked on some of the most visually stunning films of this century, including Sin City, Alice in Wonderland, and Hugo, the last of which won him an Oscar in 2012.²

Grossmann recalls his early days in Hollywood, when he first learned that visual effects take weeks or even months to render.

“It was super frustrating,” he says. “And so pretty much most of my career was spent trying to figure out how to speed up that process. I always felt like everybody we worked with would be better off if they could see what they were doing in real time.”

Further Reading: How AI Will Merge Film & Games

We believe we’re right around the corner from a next generation Pixar. Generative AI is enabling a foundational shift in creative storytelling, empowering a new class of human creators to tell stories in novel ways not feasible before. — A16Z GAMES’s Jonathan Lai

A Real-Time Technical Revolution

With his latest project, the blockbuster Fallout TV series on Amazon Prime Video, Grossmann is at the forefront of a revolution in visual effects for film and TV: using real-time game engines such as Unity and Unreal to make live, on-set, in-camera adjustments to VFX.

The flashiest and most-discussed way that game engine tech has infiltrated Hollywood in recent years is probably the LED volume: a set enclosed by giant wraparound LED screens that display real-time imagery. These screens create stunning backdrops for scenes that would otherwise rely on green screens and a much slower traditional VFX rendering process to achieve a similar effect.

Grossmann calls this a real revolution in the way visual effects are created, in part because real-time visual effects let everyone on set see how the final scene will look, without waiting for finished shots to come back from a traditional VFX department.

Traditional, “baked” VFX have long imposed a burden, Grossmann says. “When people can't see everything, they're making bad decisions with partial information. When you're making things you can’t see until later, the quality of the story degrades because actors don't know where they are. They have to imagine it.”

Because LED volumes let directors and actors see their backgrounds live, in real time, shooting feels more like the old Hollywood days of practical effects.

Except now, directors feel like they have superpowers.

Moving the Sun With a Flick of the Wrist

“It used to be in the old days, you had to figure out what you wanted so far in advance,” says Grossmann. “You had to commit to it, build it, and then that was it.” And things could get very time-consuming if the director decided, for instance, to move the set around to shoot from a different angle.

But when the environment is being rendered in a real-time game engine, it can be changed instantly.

“Say you want to move a building 15 feet to the left. You can just pick it up and move it, and add more plants or whatever it is that you want in that particular shot,” Grossmann says. “It gives people superpowers over the physical world,” including things like lighting. “In a virtual set, you can just grab the sun and put it wherever you want and make adjustments like that. So you can rewind time and shoot that moment again.”

Lighting has traditionally been handled on Hollywood sets by a gaffer, sort of the chief on-set electrician. And that’s still the case on sets using LED volume tech. When Grossmann’s team at Magnopus is on set with a film crew, there’s a game engine specialist called a virtual gaffer who reports to the regular gaffer.

Grossmann explains: “So when the gaffer says, ‘I'm going to change these lights on the physical set,’ then the virtual gaffer says, ‘OK, I'm going to move those in sync with the virtual set.’ And a lot of times, we connect them directly so that when the gaffer makes changes to the physical lights on the set, it also automatically changes the virtual lights in Unreal Engine. So if they change the color temperature, it changes the color temperature. If they change the brightness, same thing. So you can connect all these things using the same controls.”

Traditional film crews can use the tech in other ways, too, some familiar and some new. Grossmann gives the example of set decorators, the people who would normally walk around the set moving props and adjusting the position of things relative to the camera.

These same people, he says, “can put on a VR headset, and you can have them inside the LED volume flying around moving things.”

It’s this confluence of technologies that’s driving the creation of something almost magical—a blurring of the lines between film worlds and game worlds.

“It's about giving physical people the ability to transport into a digital world and move back and forth between those two,” Grossmann says, “so they all appear to be one from the camera's perspective.”

A Burgeoning Technology

The use of game engine technology in filmmaking is still new in the grand scheme of things. And if you talk to people who work in visual effects, they’ll tell you that much of the credit for pushing things forward goes to actor/director/producer Jon Favreau, in partnership with Lucasfilm-owned VFX house Industrial Light & Magic and Unreal Engine creator Epic Games.

Johnson Thomasson is a supervising technical director at visual effects house The Third Floor who has worked on Favreau-run shows like The Mandalorian and The Book of Boba Fett.

Thomasson says that “Favreau has really driven virtual production and the use of game engines in the filmmaking process. He comes from a line of filmmakers like Lucas and Cameron, who had a vision for what they wanted to create but also a vision for a better way of collaborating, enabled by new technology.”

The Mandalorian’s first season famously introduced LED volume sets to create gorgeous, 3D real-time environments. For the show’s second season, Thomasson led a team that developed a suite of pipeline tools allowing the pre-vis department to work in Unreal Engine and share assets with other departments. (Pre-vis, short for previsualization, is a rough animated version of a film or TV show made before it is shot, sort of like an evolved storyboard.)

“Favreau is unique in that he wants to see the entire episode done in pre-vis, including dialogue scenes,” Thomasson says. “A lot of shows we just come in for the complicated visual effects sequences. But in the case of The Mandalorian and Boba Fett and other shows in that universe, Favreau really wants to playtest an entire episode in pre-vis. He's been a big advocate of using game engine technology for this purpose because of its real-time nature.”

From Jungle Book to Megalopolis

One of the earliest films to make real use of game engines was Favreau’s 2016 film The Jungle Book, which used the Unity game engine (and on which Ben Grossmann was visual effects executive producer).

And 2019’s The Lion King took things even further.³

“Very little of that film was actually captured in a physical camera,” Thomasson says, “but the way they worked was very intuitive for traditional filmmakers, where they had dollies and cranes and various things on a stage where the motion from those things was being captured and fed into a game engine and being rendered in real time. So they were sort of physically producing a movie, but in the virtual world.”

Thomasson says that in many ways, the production pipeline for movies being created in this way bears similarities to the production pipeline for video games.

“You have to build assets and effects. You have to have version control. You're still modeling, texturing and rigging characters and vehicles and props. And then you have environment artists who are laying out environments. Though usually they're working based on concepts and designs that came from the art department, which is led by the production designer on film.”

Thomasson’s most recent role was as Senior Unreal Engine Specialist on Francis Ford Coppola’s upcoming Megalopolis, an experience that saw him working closely with the legendary director of Apocalypse Now and The Godfather trilogy.

“I was brought on to facilitate exploring the environments that were being built for visual effects as they were a work in progress,” Thomasson says, “so I would bring in the work that the visual effects vendor was doing into Unreal, and with Francis nearby, I could pilot around and show him this world that was being built and get his feedback in an interactive way. So I could immediately change something and he could say, yes, no, more or less.”

The Megalopolis VFX team ultimately used Unreal Engine not only for those real-time explorations, but also for a number of final VFX shots. “We really pushed what the engine was capable of in terms of render fidelity as far as we could take it,” Thomasson says.

Still, Thomasson says he expects game engine tech to keep making strides: “It's inevitable that Unreal is going to keep pushing the envelope in terms of rendering quality, which is what is demanded by visual effects for film,” he says.

“The immediate feedback of real-time graphics is so advantageous, but ultimately in film VFX we also have to be able to create photorealistic images.”

An AI-Powered Future for Film?

Ben Grossmann is still thinking about ways to speed up creativity on film sets. The next frontier, he says, may be driven by AI.

When it comes to actually generating the 3D models and art assets for virtual production, “we still have the challenge of having to build everything by hand,” Grossmann says.

There are alternatives, such as Epic’s photogrammetry tools and asset libraries, but these are only partial solutions for most filmmakers building out a set. “Epic has invested a lot of time and money in building out libraries of reusable plants and vegetation and things that look real, so people don't have to keep making trees over and over,” Grossmann says. “But for most things I’ve still got to go to a location, take a bunch of pictures, and convert those pictures into 3D models. That process takes a lot of time and effort.”

And it can be expensive—millions of dollars to create a virtual set.

The dream, then, would be for a director or film crew to be able to dynamically generate and adjust assets on the fly, “the same way a director would walk onto a set and ask for a performance from a human being.”

“I think this is going to be the next major revolution in content creation,” Grossmann says, “because what stops people from telling stories right now is the fact it costs millions of dollars to build the world your story takes place in.”

Grossmann sees the potential for generative AI not only to make professional production faster and more efficient, but also to bring a “new wave of accessibility” to immersive storytelling.

Ultimately, Grossmann envisions a future where “a kid in their basement can just create a story running on a gamer class graphics card.”

“We're right on the precipice of it, and it’s going to be a pretty explosive transition.”

🤿 How Dave the Diver Sold Over 4 Million Copies

In this episode of Win Conditions, we dive into the creative journey of Jaeho Hwang, the visionary game director behind the breakout hit Dave the Diver.


💼 Jobs Jobs Jobs

There are currently over 100 open job listings across both the A16Z GAMES portfolio and our SPEEDRUN portfolio. For the freshest games industry job postings, be sure to follow our own Caitlin Cooke and Jordan Mazer on LinkedIn.

Join our talent network for more opportunities. If we see a fit for you, we'll intro you to relevant founders in the portfolio.


This newsletter is provided for informational purposes only, and should not be relied upon as legal, business, investment, or tax advice. You should consult your own advisers as to those matters. This newsletter may link to other websites and certain information contained herein has been obtained from third-party sources. While taken from sources believed to be reliable, a16z has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation.

References to any companies, securities, or digital assets are for illustrative purposes only and do not constitute an investment recommendation or offer to provide investment advisory services. Any references to companies are for illustrative purposes only; please see a16z.com/investments. Furthermore, this content is not directed at nor intended for use by any investors or prospective investors, and may not under any circumstances be relied upon when making a decision to invest in any fund managed by a16z. (An offering to invest in an a16z fund will be made only by the private placement memorandum, subscription agreement, and other relevant documentation of any such fund which should be read in their entirety.) Past performance is not indicative of future results.

Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Content in this newsletter speaks only as of the date indicated. Any projections, estimates, forecasts, targets, prospects and/or opinions expressed in these materials are subject to change without notice and may differ or be contrary to opinions expressed by others. Please see a16z.com/disclosures for additional important details.

¹ Sales estimate source: GameDiscoverCo.

² Grossmann also won an Emmy for the show The Triangle in 2006.

³ Favreau later brought in Grossmann and his company, Magnopus, to level up virtual production on The Lion King. Magnopus was also involved with the LED stages on The Mandalorian.