Three Viral Experiments With AI-Powered Games

We spoke to the creators of a viral AI Minecraft demo, a YouTuber who trained an AI to play Pokémon, and some hackers who are modding AI features into Skyrim

How will AI tech change video games?

It’s a hot question, and every few months a new possible answer emerges and takes the internet by storm. The latest example came just last Friday, when the AI startup Decart and chipmaking startup Etched caused a full-blown uproar with their reveal of Oasis, a playable AI-generated take on Minecraft.

Oasis works by predicting and generating, pixel-by-pixel, what the model thinks the next frame ought to look like based on its training data (a combination of 100,000+ hours of licensed Minecraft footage and some associated keyboard and mouse input data). As a result, Oasis plays more like a strange, interactive dream than any game you’ve encountered before.

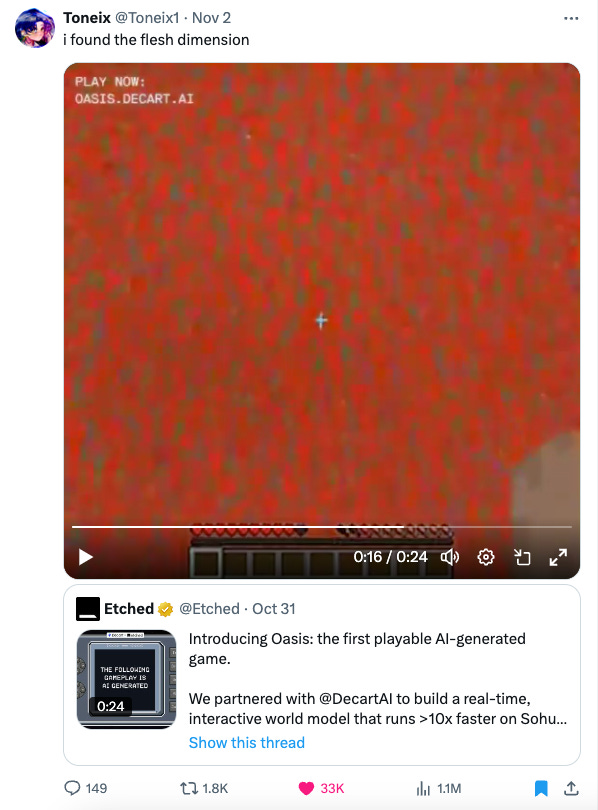

Because the model only holds a few seconds of environmental data in memory, you can teleport to a new randomly generated location simply by looking at the sky for a few seconds. Players quickly caught on to this and began jokingly calling Oasis “dementia Minecraft.”
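To see why a short memory produces this "teleport" effect, here is a toy sketch of a frame-by-frame loop with a fixed-size context window. It is not Decart's model: `predict_next_frame`, the frame labels, and the window size `CONTEXT_FRAMES` are all hypothetical stand-ins, chosen only to show how terrain drops out of memory once the window fills up with sky.

```python
from collections import deque

CONTEXT_FRAMES = 4  # hypothetical window size; the real model's is not stated here


def predict_next_frame(context, action):
    """Toy stand-in for a learned model: it can only use what is in `context`."""
    if action == "look_up":
        return "sky"
    # Looking back down: continue the remembered terrain if any is still in
    # the window, otherwise invent something new.
    remembered = [frame for frame in context if frame != "sky"]
    return remembered[-1] if remembered else "new_terrain"


def play(actions, start_frame="village"):
    """Generate one frame per action, feeding each output back in as context."""
    context = deque([start_frame], maxlen=CONTEXT_FRAMES)
    frames = []
    for action in actions:
        frame = predict_next_frame(context, action)
        context.append(frame)  # the oldest frame falls out once the window is full
        frames.append(frame)
    return frames


# A quick glance at the sky keeps the village in memory...
print(play(["look_up", "look_down"]))
# ...but staring long enough pushes it out of the window entirely.
print(play(["look_up"] * 4 + ["look_down"]))
```

In this sketch the player returns to the village after a short glance upward, but lands somewhere new once every frame in the window is sky.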

The memes and gameplay clips coming out of the Oasis demo are hilarious, but the technology is no joke. This week we spoke to the founders behind Oasis, as well as others at the forefront of implementing AI tech in games, including a team that’s modding AI features into Skyrim and a designer creating cutting-edge in-game AI companions.

That story below. But first, this week’s news from the future.

News From the Future

📽️ What if A.I. Is Actually Good for Hollywood? (The New York Times)

As this newsletter reported in August, Hollywood is eager to embrace AI. The New York Times reports that recent advances have “hinted tantalizingly at what A.I. could soon accomplish at IMAX resolutions, and at a fraction of the production cost.”

🤖 Netflix Appoints New VP of GenAI for Games (Pocket Gamer)

Netflix’s Mike Verdu said he’s working to drive “a once in a generation inflection point” for game development and player experiences using generative AI. “I am focused on a creator-first vision for AI, one that puts creative talent at the centre, with AI being a catalyst and an accelerant,” he said.

🧙 Hogwarts Legacy 2 Coordinating ‘Big-Picture Storytelling’ With Harry Potter TV Show Storylines (IGN)

Last year’s Hogwarts Legacy game by Avalanche Software was a monster hit, with over 30 million units sold. Now owner Warner Bros. is calling the game’s sequel one of its “biggest priorities” as it looks to bring the unannounced follow-up to fans in the next couple of years. WB leadership is also hinting that the game’s storyline will tie into the narrative that will play out in the Harry Potter HBO TV show.

Three Viral Experiments With AI-Powered Games

“We’re trying to get to a world where every single pixel is generated—a world where you don’t need to write code to see it on a screen.”

That’s Dean Leitersdorf, cofounder and CEO of Decart, the AI startup behind the Oasis demo. He sees the company’s viral AI Minecraft demo as just the first step toward unlocking what he calls “video as a real-time interface between machines and humans.”

But first, there are going to be some technical hurdles to get over.

On a call with A16Z GAMES, Leitersdorf and his collaborator on Oasis, Etched cofounder and COO Robert Wachen, laid out what they see as the gap between the v1 tech that Decart and Etched just revealed and planned future versions.

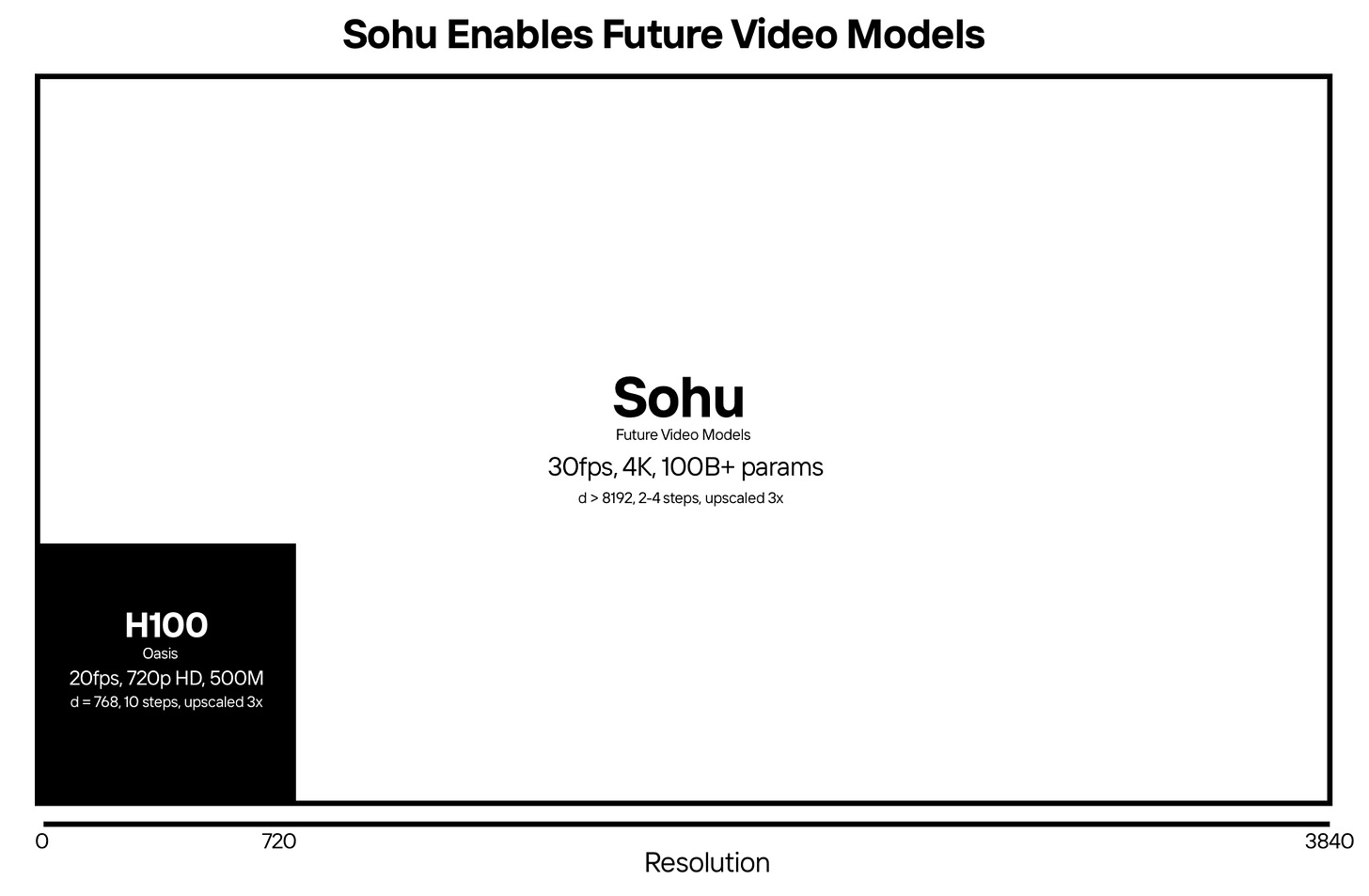

The current iteration of Oasis was powered entirely by Nvidia H100 GPUs, which limits both the number of users that can be served at any time and the resolution the game can run at, Leitersdorf explained. His hope is that they’ll see significant performance gains by switching to Etched’s “Sohu” chips in the future.

“The input size and the number of tokens that are being processed for videos is tremendous,” Wachen explains. “It's way more than text.”

Wachen and Leitersdorf both stress that they don’t see the future of the technology as relying on GPUs. “Dean and his team are some of the few people in the world that could get any video model running real time on GPUs,” Wachen says, “but pretty soon they'll be able to do that in high resolution at scale to millions and millions of users on our chips.”

Leitersdorf says that his long-term dream is a new type of gaming experience with video that responds to both visual and verbal prompts from users. With evolving video recognition technology, he argues, cameras could become a valid input method for this new, interactive video form.

“The other very important piece,” he says, would be the ability for users to “create worlds and others could experience them.” It’s a promising-sounding future, and one that users are flocking to try out: Decart announced that they’d reached over 1 million unique users in the first 79 hours after launch.

But for now, Wachen says, his team hopes their Minecraft model will inspire imaginations. “The main goal is just to get people thinking about what's possible.”

The future looks bright, but completely AI-generated experiences are just one branch of the AI tech tree being explored by creators at the moment.

Surprisingly inventive applications of AI to games are emerging in other places as well, including in games much older than you’d expect.

Training AI to Play Pokémon

One of the past year’s most viral videos about AI-powered games was Peter Whidden’s “Training AI to Play Pokemon with Reinforcement Learning,” which has racked up over 7 million views on YouTube.

Part of the video’s appeal is the pure visual spectacle it offers. “Right now we’re watching 20,000 games played by an AI as it explores the world of Pokémon Red,” Whidden explains, as an endless torrent of characters—each representing a single in-game run—streams across the screen.

But the real power of Whidden’s video is how deftly he explains his own process for creating and training his Pokémon-playing AI.

His video breaks down his entire process, including mistakes, limitations he encountered, and different approaches he tried, in a way that any intelligent high schooler could follow. The end of the video even shows interested would-be AI programmers how to get started running similar experiments themselves.

The resulting attention on Whidden’s work was “definitely a bit overwhelming for a while,” he tells A16Z GAMES, “particularly when so many people with a wide range of experience were all interested in using and contributing to the code.”

One of the more fascinating aspects of the video was the set of emergent behaviors Whidden’s AI came up with in its attempts to beat the game. For instance, the AI came to dislike losing so much that if it lost a battle, it would simply refuse to play the game anymore.

“In one part of the analysis I note that the agent seems to have a preference for moving counterclockwise around the edges of the map,” Whidden says. “I speculated that this might be a heuristic that's helpful for navigation with limited memory and planning.”

But Whidden was “stunned,” he says, when he received a comment from a firefighter who informed him that they used a similar approach when entering buildings with poor visibility—sticking to walls in one direction before switching in order to exit. “I really didn't expect to see an abstract behavior learned in the game validated by something in the real world like that,” Whidden says.
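The firefighter's technique resembles the classic wall-following rule for navigating with little memory. The sketch below is a hand-written version of that heuristic on a small grid, not Whidden's agent (which learned its behavior through reinforcement learning). The grid, the `wall_follow` function, and the right-hand convention are all assumptions made for illustration.

```python
# Headings in clockwise order as (row, col) offsets: North, East, South, West.
DIRS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


def is_open(grid, r, c):
    """True if (r, c) is inside the grid and not a wall ('#')."""
    return 0 <= r < len(grid) and 0 <= c < len(grid[0]) and grid[r][c] != "#"


def wall_follow(grid, start, heading, steps):
    """Walk `steps` moves keeping a wall on the right-hand side.

    The only state carried between moves is the current position and
    heading, which is why the rule works with very limited memory.
    """
    r, c = start
    path = [start]
    for _ in range(steps):
        # Prefer turning right, then going straight, then left, then back.
        for turn in (1, 0, -1, 2):
            d = (heading + turn) % 4
            nr, nc = r + DIRS[d][0], c + DIRS[d][1]
            if is_open(grid, nr, nc):
                r, c, heading = nr, nc, d
                path.append((r, c))
                break
        else:
            break  # boxed in on all four sides
    return path


ROOM = [
    "#####",
    "#...#",
    "#...#",
    "#...#",
    "#####",
]

# Start in the bottom-left corner facing East (index 1 in DIRS).
print(wall_follow(ROOM, (3, 1), 1, 8))
```

In this small room the walker hugs the perimeter, never steps into the center cell, and returns to its starting point after eight moves. Drawn on screen, the loop runs counterclockwise.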

Whidden wound up creating a Discord server that now serves as a budding community of people running AI-powered Pokémon experiments. Whidden says his next video will likely highlight some of the progress his community has made. “It quickly became something much larger than myself, and it's amazing to see how much further people have taken it,” he says.

In the meantime, Whidden says he’s spent much of the last year working on a new project that was “partially inspired by the response to the Pokémon video,” and that he’ll be posting videos about that once it’s ready.

Cobbling Together AI Companions in Skyrim

Gamers long ago learned to shrug off repetitive dialogue and limited conversations with non-player characters (NPCs), but what if you could use a large language model to turn NPCs into more fully fleshed-out characters capable of sustaining real conversations?

That’s the idea behind the ChatGPT-powered Skyrim mod called Herika.

Reece Meakings, the co-creator of the Herika mod, says the initial versions of Herika were the result of a side project to test integrating the OpenAI API into Skyrim. “The original mod really only summarized books and would make random comments about your location,” Meakings says, but it would quickly expand.

The mod’s original creator, who goes by tylermaister, soon published an update that allowed users to speak with Herika using a chatbox and gave her a voice using Azure text-to-speech. “This update blew my bloody mind,” Meakings says. “I was in the Windhelm Inn and I just spent an entire hour having a real time ‘human’ conversation with a video game character!”

Meakings linked up with tylermaister, and for the past year they’ve been collaborating on an ever-expanding set of features for Herika. “I can safely say for both of us this has been one of the most important things we have worked on,” Meakings tells A16Z GAMES.

Piece by piece, an astonishingly advanced and convincing set of AI companion features has been added to the mod. Players can now speak with Herika directly using their voice thanks to speech-to-text support, and she remembers all of her conversations with the player. She’s also been given the ability to “see” the game world around her, allowing her to navigate the world and function effectively as a true “player 2” in Skyrim adventures.

The latest versions of the mod have gone further by introducing a feature Meakings calls “sapience,” a sort of local area ring around the player that automatically activates AI features in any NPC surrounding the player character. “You can easily walk up to any person in the game and have a chat instantly,” Meakings says, and even when you’re not interacting with them directly, the AIs have “radiant conversations” amongst themselves. “You can sit in a bar and just listen to the NPCs banter amongst themselves,” he says.
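A ring like the one Meakings describes can be pictured as a simple distance check run whenever the player moves. The sketch below is purely illustrative and is not taken from the Herika mod. The `NPC` class, the `update_sapience` function, the 2D coordinates, and the radius value are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class NPC:
    name: str
    x: float
    y: float
    ai_active: bool = False  # whether AI-driven dialogue is switched on


def update_sapience(player_pos, npcs, radius):
    """Switch AI features on for NPCs inside the ring and off for the rest.

    Returns the names of the NPCs that are currently active.
    """
    px, py = player_pos
    for npc in npcs:
        distance = math.hypot(npc.x - px, npc.y - py)
        npc.ai_active = distance <= radius
    return [npc.name for npc in npcs if npc.ai_active]


# Hypothetical scene: one NPC near the player, one far away.
tavern = [NPC("innkeeper", 1.0, 1.0), NPC("guard", 50.0, 0.0)]
print(update_sapience((0.0, 0.0), tavern, radius=10.0))
print(update_sapience((45.0, 0.0), tavern, radius=10.0))
```

Limiting AI features to nearby characters would presumably keep the number of simultaneous language model calls manageable, though the source does not say how the mod handles this.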

Still, Meakings says, the team is beginning to run up against some theoretical limits for how Skyrim could feasibly integrate AI features. For instance, many in-game requests intentionally funnel players into specific actions and conversation trees, and while you can have other conversations with those NPCs, that won’t necessarily help you progress through the game.

“You still need to play out the ‘regular Skyrim’ quest stages to actually complete the quest,” Meakings explains. “This is just one of the issues with building on top of existing games as it's hard to ‘fully’ integrate all actions to be accountable by the AI system.”

Truly immersive worlds with AI-powered NPCs, then, may need to be designed with the tech in mind from the beginning.

But in the meantime, we’re getting some truly unbelievable glimpses of the future.

That’s it for this week. Want more pieces like this one, or have questions that you’d like to see A16Z GAMES tackle?

Write in to us at games-content@a16z.com

🌴 LA Tech Week is a Wrap!

Thanks to everyone who joined us for LA Tech Week! Special shout out to AppsFlyer’s Brian Murphy, Mavan’s Matt Widdoes, Riot Games’s Cody Christie, and moderator Jen Donahoe (of Deconstructor of Fun fame) for joining our own Doug McCracken to chat about “Taking Your Game From Zero to One Million.”

💼 Jobs Jobs Jobs

There are currently over 100 open job listings across both the A16Z GAMES portfolio and our SPEEDRUN portfolio. For the freshest games industry job postings, be sure to follow our own Caitlin Cooke and Jordan Mazer on LinkedIn.

Join our talent network for more opportunities. If we see a fit for you, we'll intro you to relevant founders in the portfolio.

